Suppose you are trying to bulk load the following data from a text file (the first row is the column header):

ID,Ename,City

1,Hamed,Hyderabad

2,Bill,Medina

3,Steve,Redmond

Issue:

Bulk load data conversion error (type mismatch or invalid character for the specified codepage) for row 1, column 2

Resolution:

In the Bulk Insert Task Editor, on the "Options" tab, make sure FirstRow is set to 2 (the default is 1). This skips the column header row so it is not loaded as data.
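To see why the header row triggers a conversion error, here is a minimal Python sketch (not SSIS itself) of a loader with a FirstRow-style setting. It assumes the destination's ID column is an integer, so the header text "ID" cannot be converted; the function name `load_rows` is hypothetical.

```python
import csv
import io

# The sample file from above, header row included.
SAMPLE = "ID,Ename,City\n1,Hamed,Hyderabad\n2,Bill,Medina\n3,Steve,Redmond\n"


def load_rows(text, first_row=1):
    """Mimic a bulk load that starts at the 1-based row `first_row`.

    Assumes the destination ID column is an integer, so the header
    text "ID" cannot be converted and a ValueError is raised.
    """
    rows = []
    for row_number, fields in enumerate(csv.reader(io.StringIO(text)), start=1):
        if row_number < first_row:
            continue  # skipped, like rows before FirstRow
        emp_id, ename, city = fields
        rows.append((int(emp_id), ename, city))
    return rows


try:
    load_rows(SAMPLE)  # first_row=1: the header row is treated as data
except ValueError as exc:
    print("conversion error:", exc)

print(load_rows(SAMPLE, first_row=2))
# [(1, 'Hamed', 'Hyderabad'), (2, 'Bill', 'Medina'), (3, 'Steve', 'Redmond')]
```

Starting at row 2 is the same idea as setting FirstRow to 2: the header is never handed to the type conversion.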

Other possible causes are the text qualifier or a Unicode source file. For the first case, make sure the text qualifier is set correctly in the Flat File Connection Manager. For the second, convert the data to DT_STR, or define the destination table columns as nchar (instead of char) or nvarchar (instead of varchar).
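A Unicode source file is often, though not always, marked by a byte-order mark (BOM) at the start of the file. As a quick check before choosing between a DT_STR conversion and nchar/nvarchar columns, this minimal Python sketch looks for one. The helper names `bom_encoding` and `file_bom_encoding` are hypothetical, and a file without a BOM may still be Unicode, so treat `None` as "unknown" rather than "not Unicode".

```python
import codecs

# Byte-order marks to look for. UTF-32 LE (FF FE 00 00) starts with the
# UTF-16 LE mark (FF FE), so the UTF-32 entries must be checked first.
_BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32-le"),
    (codecs.BOM_UTF32_BE, "utf-32-be"),
    (codecs.BOM_UTF8, "utf-8-sig"),
    (codecs.BOM_UTF16_LE, "utf-16-le"),
    (codecs.BOM_UTF16_BE, "utf-16-be"),
]


def bom_encoding(head):
    """Return the encoding implied by a leading byte-order mark, or None if there is none."""
    for bom, name in _BOMS:
        if head.startswith(bom):
            return name
    return None


def file_bom_encoding(path):
    """Read only the first four bytes of the file and check them for a BOM."""
    with open(path, "rb") as f:
        return bom_encoding(f.read(4))
```

A UTF-16 result points to a Unicode file, for which nchar/nvarchar destination columns are the natural fit.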

Tuesday, July 28, 2009

Wednesday, July 8, 2009

SSIS: Handling different row types in the same file

Problem Description:

Suppose you have a file with rows like these:

E001 Steve Bellevue

C002 Bill Medina

Index 0 is the record type, where C = Chairman and E = CEO.

Indexes 1 to 3 are the employee ID.

Indexes 5 to 9 are the employee's name.

Indexes 10 to 20 are the address.
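Before looking at the SSIS solution, it may help to see this layout expressed as code. The Python sketch below treats the positions as zero-based and inclusive (so indexes 5 to 9 become the slice `line[5:10]`), trims padding, and groups rows by record type. The dictionary keys and the "unmatched" bucket are hypothetical names, not part of the original description.

```python
from collections import defaultdict

# Record-type codes from the problem description.
RECORD_TYPES = {"C": "Chairman", "E": "CEO"}


def parse_record(line):
    """Split one fixed-width row using the zero-based, inclusive positions above."""
    return {
        "record_type": line[0],          # index 0
        "employee_id": line[1:4],        # indexes 1-3
        "name": line[5:10].strip(),      # indexes 5-9
        "address": line[10:21].strip(),  # indexes 10-20
    }


def distribute(lines):
    """Group parsed rows by record type; unknown codes go to 'unmatched'."""
    groups = defaultdict(list)
    for line in lines:
        record = parse_record(line)
        groups[RECORD_TYPES.get(record["record_type"], "unmatched")].append(record)
    return dict(groups)


rows = ["E001 Steve Bellevue", "C002 Bill Medina"]
for target, records in distribute(rows).items():
    print(target, records)
```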

If you need to distribute the rows to different destinations based on the record type, use the following solution.

Solution:

Thanks to a post by Allan Mitchell for the following solution, which resolves the problem described above.

Sometimes source systems send us text files that are a little out of the ordinary. More and more frequently, we see people being sent master and detail (or parent and child) rows in the same file. Handling this in DTS is painful; in SSIS that changes. In this article we'll take you through one file of this type and provide a solution that is more elegant than what we have today, and which will hopefully give you ideas about how to handle your own versions of this type of file. This is a simple solution, but it could be extended with more powerful processing techniques, such as performing lookups to see whether any of the rows coming through the pipeline already exist in our destination. This is how our package should look when everything is finished.

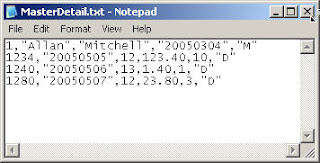

The graphic below shows the text file sent to us by our source system. As you can see, the last column is the row type: an "M" indicates a master row and a "D" indicates a detail row. In this example there is one master row and three detail rows.

We create a Flat File connection manager that points to this file. We are not going to split the lines in the text file into their respective columns yet, because we have two types of rows and they have different numbers of columns. Instead, we are going to read in each line as one string. This is how we set up the connection manager to do that.

Once we've done that, we add a Data Flow task to our package, and in the data flow behind the task we add a Flat File Source Adapter that points to our earlier connection manager. The only thing we're going to change is the name of the output column, as shown here.

Next, we flow the output into a Conditional Split Transform. We will use this transform to interpret the line type from the final column in the text file and direct each row down the correct output. In our example the Conditional Split Transform has three possible outputs flowing downstream. The first two depend on whether we have decided the row is a Master row or a Detail row; the third, the default output, we get for free, and this is where all other rows that do not meet our criteria will flow.
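The routing logic of the Conditional Split can be sketched in Python as follows. In SSIS itself the conditions would be expressions on the single input column; assuming the type code is the final character of the line, one possible condition is `RIGHT(TheWholeLineColumn, 1) == "M"`. The sample lines below are hypothetical, since the article's file appears only in a graphic; they simply have one master row and three detail rows with different column counts.

```python
def conditional_split(lines):
    """Route each whole line by its final comma-separated field.

    "M" -> master output, "D" -> detail output, anything else -> default output,
    mirroring the three outputs of the Conditional Split described above.
    """
    outputs = {"master": [], "detail": [], "default": []}
    for the_whole_line_column in lines:
        row_type = the_whole_line_column.rsplit(",", 1)[-1].strip()
        if row_type == "M":
            outputs["master"].append(the_whole_line_column)
        elif row_type == "D":
            outputs["detail"].append(the_whole_line_column)
        else:
            outputs["default"].append(the_whole_line_column)
    return outputs


# Hypothetical sample: one master row and three detail rows.
SAMPLE_LINES = [
    "1,Order 1,2009-07-08,M",
    "1,1,Widget,10,D",
    "1,2,Gadget,5,D",
    "1,3,Gizmo,2,D",
]

split = conditional_split(SAMPLE_LINES)
print({name: len(rows) for name, rows in split.items()})
# {'master': 1, 'detail': 3, 'default': 0}
```

Because the line is never split before routing, master and detail rows can carry different numbers of columns without upsetting the source.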

Now that we've split up our rows and assigned them to the correct outputs, we need to hook each output up to a Script Transform that breaks the line apart and creates our real columns from the one line. When you drag an output from the Conditional Split Transform to the Script Transform, you will be asked which output you would like to attach. In the Script Transform, the first thing we are going to do is add some columns to the output. Once data has flowed through this component, downstream components will see these columns, which makes it easier for the package designer to map them to their correct destinations. Here's how we do that.

We are only going to look at the Script Transform that handles the rows flowing down the master row output, but the code can easily be adapted to handle the rows flowing through the detail row output. We now have one column coming into this transform and a number of newly created output columns ready to take data from it, so let's write some script to populate them.
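The script itself is not reproduced in this post. As a rough Python equivalent of the per-row logic (split the incoming line on commas, then assign each element to an output column), here is a sketch. The `MasterRow` fields and the assumption that a master row has exactly four fields are hypothetical, because the article's actual output columns are only shown in a graphic.

```python
from dataclasses import dataclass


@dataclass
class MasterRow:
    # Hypothetical output columns; the real column names are not given in the text.
    order_id: int
    description: str
    order_date: str
    row_type: str


def process_master_line(the_whole_line_column):
    """Split the incoming line on commas and assign each element to an output column."""
    parts = the_whole_line_column.split(",")
    if len(parts) != 4:
        raise ValueError(f"expected 4 fields in a master row, got {len(parts)}")
    return MasterRow(
        order_id=int(parts[0]),
        description=parts[1],
        order_date=parts[2],
        row_type=parts[3],
    )


print(process_master_line("1,Order 1,2009-07-08,M"))
```

Checking the field count up front makes a detail row that reaches the master transform fail loudly instead of filling the wrong columns.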

All this code does is take the incoming column (TheWholeLineColumn) and split it on a comma delimiter. We then assign the elements of the resulting array to our output columns. All that is left now is to hook up the output from the Script Transform to a destination; for ease of use, we have simply pointed it to a Raw File Destination Adapter. We have also added a data viewer to the flow of data going into the Script Transform so we can see the definition of the row going in. You can see this in the graphic below.

Finally, all that is left for us to do is run the package and watch the rows flow down the pipeline. We should see one row flow down the master output and three rows flow down the detail output.

SSIS: Choosing a Configuration Approach for Deployment

The following are some sample approaches; these are not the de facto...

Recommendations for Specific Scenarios

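1. If the deployment environment supports environment variables and has a SQL Server database available, SQL Server configurations can be used, with the location of the configuration database supplied through an indirect configuration (based on an environment variable). If a SQL Server database is not available, then XML files with indirect configurations (based on environment variables) can be used in a very similar way.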

2. If the deployment environment does not allow environment variables, but a consistent path can be provided in each environment, then XML configurations can be used. The path should be identical in each environment (for example, “C:\SSIS_Config\”), so that a local copy of the configuration file can be deployed to each environment without having to modify the path to point to a new configuration location.

3. If environment variables are not allowed, and a consistent local path cannot be used, the Parent Package Variable approach is often the best approach, as it isolates any environmental changes to the parent package.
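The three recommendations form a small decision procedure. The Python sketch below encodes it, assuming scenario 1 means SQL Server configurations whose location is supplied through an environment variable. The function name, its parameters, and the returned labels are all hypothetical; it only restates the guidance, it does not configure anything.

```python
def choose_configuration_approach(env_vars_allowed, sql_server_available, consistent_path):
    """Return a label for the recommended SSIS configuration approach.

    Encodes the three scenarios listed above; the strings are descriptive only.
    """
    if env_vars_allowed:
        # Scenario 1: indirect configurations, stored in SQL Server if one is available.
        if sql_server_available:
            return "SQL Server configurations with indirect configurations (environment variables)"
        return "XML configurations with indirect configurations (environment variables)"
    if consistent_path:
        # Scenario 2: same local path (for example C:\SSIS_Config\) everywhere.
        return "XML configurations at an identical path in every environment"
    # Scenario 3: isolate environmental changes in the parent package.
    return "Parent Package Variable configurations"


print(choose_configuration_approach(False, True, True))
```

The order of the checks follows the list: environment-variable support is decided first, and the Parent Package Variable approach is the fallback when neither environment variables nor a consistent path are available.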
